With CUDA 6 released in April 2014, we dig into the world of CUDA cores: what they bring us today and what they could bring us in the future.

What is CUDA?

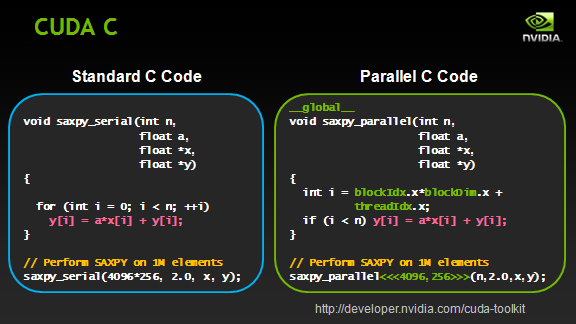

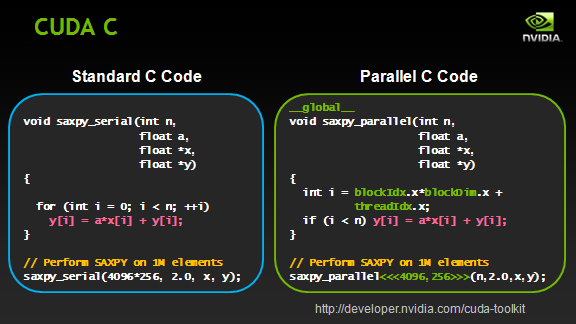

CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model developed by NVIDIA and implemented by NVIDIA's GPUs (Graphics Processing Units). CUDA gives developers direct access to the virtual instruction set and memory of the parallel computational elements in CUDA GPUs. These GPUs are not limited to graphics work such as rendering smooth textures and effects like debris, fire, smoke and water; they can also be used for general-purpose processing, an approach often referred to as GPGPU (general-purpose computing on GPUs).
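As a rough illustration of what general-purpose processing looks like in the CUDA model: a function called a kernel runs once per thread, and each thread works out which data element it owns from its block index and thread index. The sketch below only emulates that index arithmetic in plain Python on the CPU. It is not CUDA code, it runs the "threads" one after another, and the names `vector_add_kernel` and `launch` are made up for this example; a real kernel would be written in CUDA C/C++ and launched on the GPU.

```python
# CPU-side emulation of CUDA-style thread indexing (illustrative only, no GPU involved).

def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Work done by one emulated thread: add exactly one pair of elements."""
    # Global index, mirroring CUDA's blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < len(out):  # guard: the last block may contain spare threads
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Hypothetical launcher: visits every (block, thread) pair sequentially.
    On a GPU these threads would run concurrently instead."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 10
a = [float(i) for i in range(n)]
b = [2.0 * i for i in range(n)]
out = [0.0] * n

block_dim = 4                                  # threads per block (arbitrary choice)
grid_dim = (n + block_dim - 1) // block_dim    # enough blocks to cover all n elements
launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(out)
```

Note that 3 blocks of 4 threads give 12 emulated threads for 10 elements, which is why the bounds check inside the kernel matters.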

How Does It Work?

GPUs can almost seem to have a mind of their own when it comes to difficult tasks. They are built for parallel throughput: they execute many threads slowly, rather than executing a single thread very quickly. CUDA provides both a low-level API and a higher-level API, and it is available on NVIDIA GPUs from the G8x series onward. The key point is that we now have far more firepower, that is, computing resources, in our hands.

New innovations arrive day by day in science, and especially in the wide field of computing. One of the critical steps for any machine learning algorithm is "feature extraction," which simply means figuring out a way to describe the data using a few metrics, or "features." Because only a few of these features are used to describe the data, they need to represent the most meaningful metrics. Previously, these features were hand-crafted, but hand-crafted expertise is now being outperformed, and replaced, by the brute force of massive training data.
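To make hand-crafted feature extraction concrete, here is a minimal sketch in Python. It reduces a list of numbers to three summary metrics. The function name `extract_features`, the choice of features (mean, standard deviation, zero crossings) and the toy signal are all assumptions made for illustration; real pipelines pick features suited to their data.

```python
import math


def extract_features(signal):
    """Describe a list of numbers with three hand-picked features."""
    n = len(signal)
    mean = sum(signal) / n
    # Population variance: average squared distance from the mean.
    variance = sum((x - mean) ** 2 for x in signal) / n
    # Count sign changes between consecutive samples.
    zero_crossings = sum(
        1 for prev, cur in zip(signal, signal[1:]) if (prev < 0) != (cur < 0)
    )
    return {
        "mean": mean,
        "std": math.sqrt(variance),
        "zero_crossings": zero_crossings,
    }


signal = [1.0, -1.0, 1.0, -1.0]  # toy data, not from any real dataset
features = extract_features(signal)
print(features)
```

Every choice here (which metrics, how to compute them) is made by a person. The data-driven approach instead lets a model learn its own features from large amounts of training data, which is where the extra computation comes from.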

The additional cost of these computations led people to turn to GPUs. We expect to see higher levels of intelligence, and even new kinds of intelligence, with many breakthroughs in the next five years. On the one hand, much larger models will be trained using much larger datasets on much bigger computers. On the other hand, these trained deep neural networks will then be deployed in many different forms, from servers in data centers, to tablets and smartphones, to wearable devices and the Internet of Things.
